July 30, 2021

JAX/Flax Week: All the news on the event

By Sofía Sánchez González

The most powerful machines from Google and a mini version of DALL-E! We bring you all the news from JAX/Flax Week, an exclusive event for the Hugging Face community in which, of course, our NLP engineer Manuel Romero took part!

In line with its goal of democratizing artificial intelligence, Hugging Face organized an event week from July 7 to 14 to engage and recognize its top users. Thanks to free access to Google's most powerful machines, participants created projects in Natural Language Processing (NLP) and Computer Vision (CV).

Manu, one of the privileged few with access, has given #TeamNarrativa the scoop!

30 revolutionary NLP projects

All this computing power made possible several innovative NLP projects from participants around the world. These are the most outstanding ones:

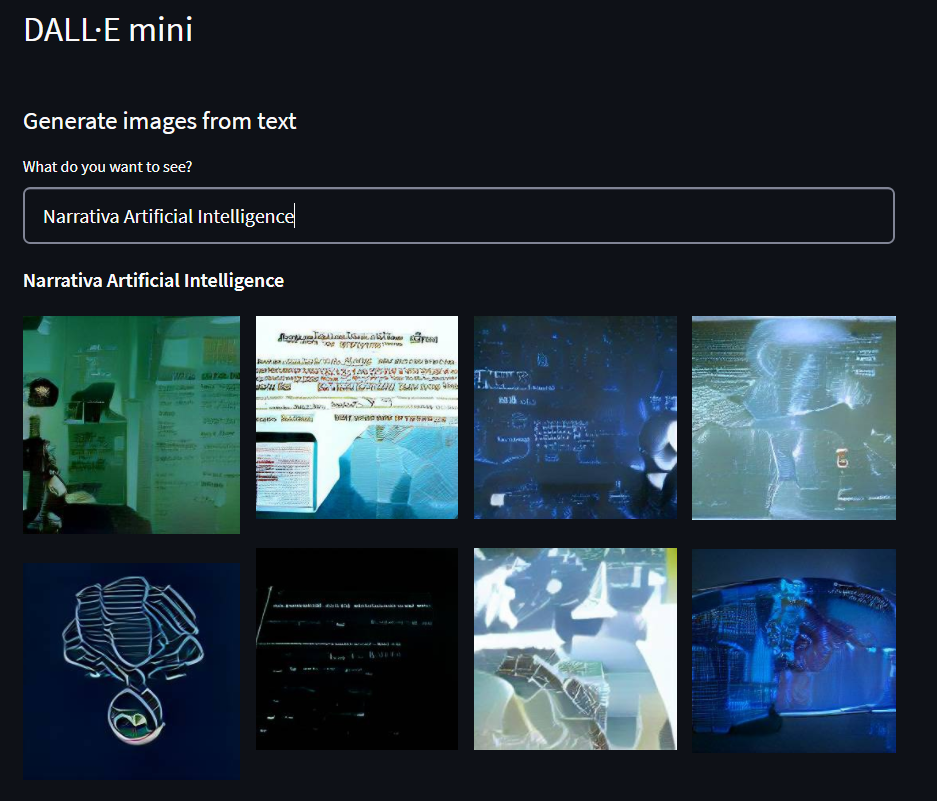

DALL-E – Mini version

Do you remember back in January, when we caught you up on DALL-E, the artificial intelligence model capable of generating images from text? At JAX/Flax Week, a mini version of it was recreated!

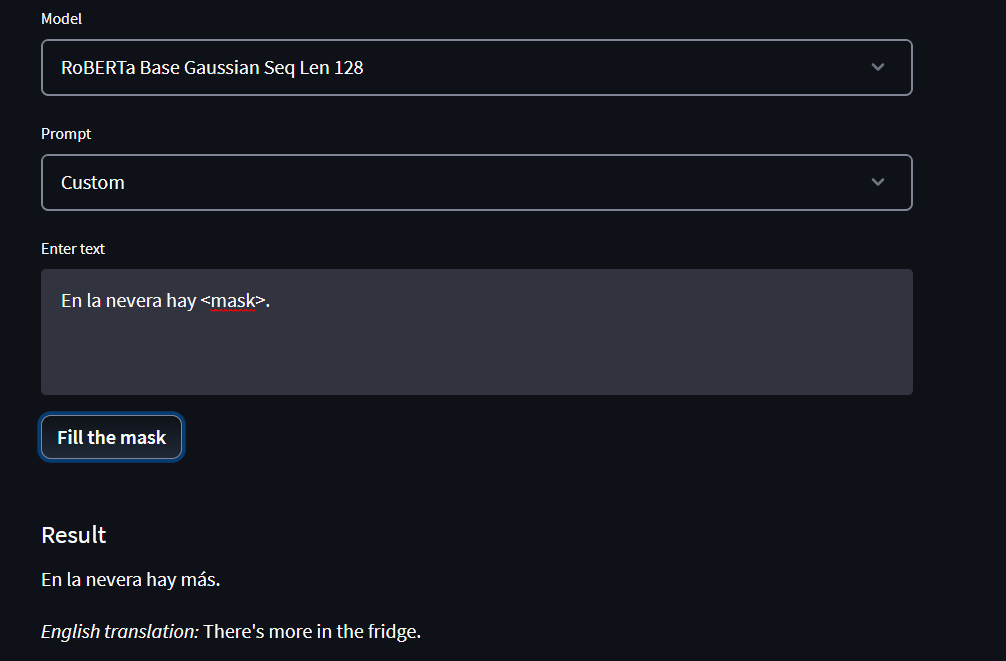

BERTIN: Pre-train RoBERTa-large from scratch in Spanish

Manu participated in this project and he tells us about the progress they’ve made. “Normally, when training a model, we want it to learn the language and how the words are combined. In short, how the language is modeled. In this case we have discovered that the ‘next sentence prediction’ phase is not so important and we have achieved dynamic masking in larger batches.”

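So what does all that mean? When pre-training a model like this, part of the text is hidden and the model has to predict the missing words. Its guesses are compared with the real words, and the model is adjusted depending on whether it got them right. With dynamic masking, a different set of words is hidden each time the model sees a sentence, instead of always the same ones. This way, RoBERTa-large, one of the most powerful models around, was pre-trained from scratch in Spanish.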
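
To make dynamic masking more concrete, here is a minimal Python sketch; it is not BERTIN's actual training code. It assumes the common BERT-style recipe (hide about 15% of the tokens; of those, 80% become a mask token, 10% a random token and 10% stay unchanged), and the `MASK_ID` and `VOCAB_SIZE` values are hypothetical. Because the mask is sampled every time the function is called, the same sentence ends up masked differently in each epoch.

```python
import random

MASK_ID = 4          # hypothetical id of the <mask> token
VOCAB_SIZE = 50_000  # hypothetical vocabulary size
IGNORE = -100        # label for positions the loss should skip

def dynamic_mask(token_ids, mask_prob=0.15, rng=random):
    """Return (inputs, labels) with a freshly sampled mask.

    Calling this each time a sequence is fed to the model gives a new
    mask every epoch (dynamic masking), instead of one mask fixed once
    during preprocessing (static masking).
    """
    inputs = list(token_ids)
    labels = [IGNORE] * len(token_ids)
    for i, tok in enumerate(token_ids):
        if rng.random() < mask_prob:
            labels[i] = tok  # the model must recover the original token here
            roll = rng.random()
            if roll < 0.8:
                inputs[i] = MASK_ID  # 80%: replace with <mask>
            elif roll < 0.9:
                inputs[i] = rng.randrange(VOCAB_SIZE)  # 10%: random token
            # remaining 10%: keep the original token
    return inputs, labels

rng = random.Random(0)
sentence = list(range(100, 120))  # hypothetical token ids
for epoch in range(2):
    inputs, labels = dynamic_mask(sentence, rng=rng)
    print(epoch, inputs)
```

Each printed line shows the same sentence with a different set of hidden positions.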

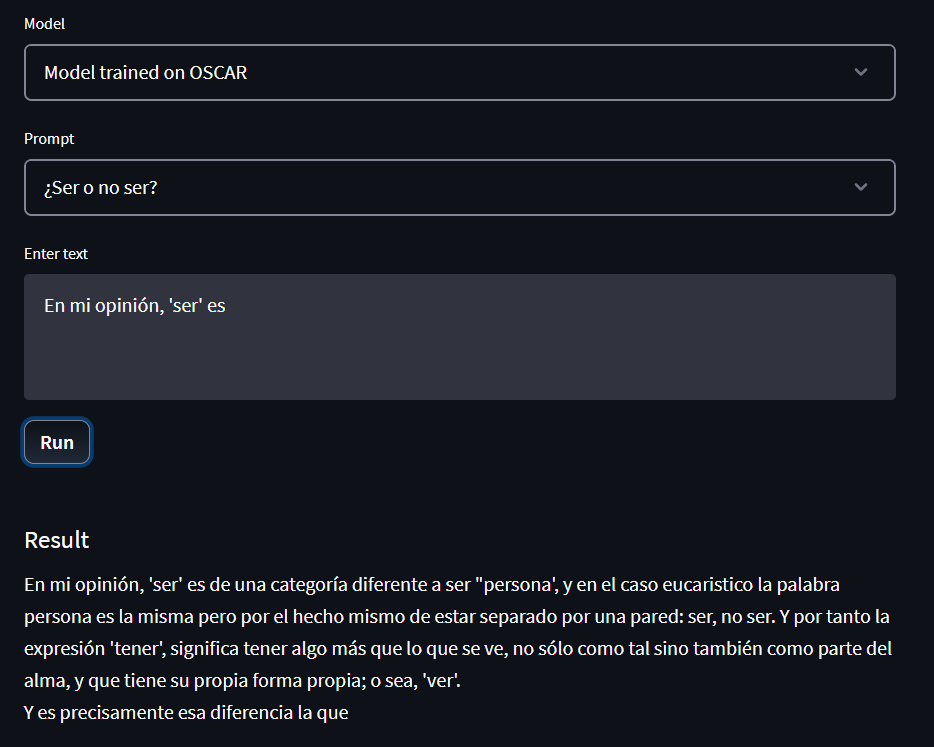

Pre-train GPT2 from scratch in Spanish

We've already told you about GPT. The first version appeared in 2018, and the idea behind it was a model that learns from previously written text to predict which word comes next. That way, it can suggest different ways to complete a sentence, saving a lot of work and bringing variety and linguistic richness to the text. The goal of this project was to train a strong GPT-2 language generation model for Spanish from scratch… and they sure made it!
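
GPT-2 itself is a large neural network, but its "predict the next word, then repeat" loop can be illustrated with a much simpler stand-in. The sketch below swaps the neural network for a toy bigram counter (an assumption made purely for illustration, trained on a made-up Spanish mini-corpus) and samples several different completions for the same prompt.

```python
import random
from collections import defaultdict

def train_bigram(text):
    """Record which word follows which in the training text."""
    words = text.split()
    follows = defaultdict(list)
    for prev, nxt in zip(words, words[1:]):
        follows[prev].append(nxt)
    return follows

def complete(follows, prompt, max_words=5, rng=random):
    """Extend the prompt one word at a time, sampling each next word.

    Duplicates in the follower lists make frequent continuations more
    likely to be picked, a crude version of a next-word distribution.
    """
    words = prompt.split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # no known continuation for the last word
        words.append(rng.choice(candidates))
    return " ".join(words)

corpus = "el perro come pan . el gato come pescado . el perro duerme ."
model = train_bigram(corpus)
rng = random.Random(1)
for _ in range(3):
    print(complete(model, "el perro", rng=rng))
```

A real GPT-2 conditions on the whole preceding text rather than only the last word, which is what lets it produce longer, coherent continuations.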

MedClip – Pre-training CLIP on medical data

Can you imagine giving the model an X-ray image and having it tell you what's wrong? That is the goal of MedClip, which pre-trains CLIP, a model that learns to match images with their text descriptions, on medical data. The project made further progress during JAX/Flax Week.
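
CLIP-style models turn an image and several candidate text descriptions into vectors (embeddings) and pick the description whose vector is most similar to the image's. The sketch below shows only that matching step, in plain Python. The embeddings are made-up numbers standing in for the outputs of trained image and text encoders, and the temperature of 0.07 is an assumption. It is not MedClip's code, and the toy scores say nothing about real diagnoses.

```python
import math

def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cosine(a, b):
    """Cosine similarity between two embedding vectors."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))

def rank_descriptions(image_emb, text_embs, temperature=0.07):
    """Softmax over image-text similarities: higher means a better match."""
    scores = [cosine(image_emb, t) / temperature for t in text_embs]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical embeddings; a trained model would compute these
# with its image encoder and text encoder.
image_embedding = [0.9, 0.1, 0.2]
descriptions = [
    ("an X-ray of healthy lungs", [0.1, 0.9, 0.1]),
    ("an X-ray showing pneumonia", [0.8, 0.2, 0.3]),
]
probs = rank_descriptions(image_embedding, [emb for _, emb in descriptions])
for (text, _), p in zip(descriptions, probs):
    print(f"{p:.3f}  {text}")
```

Pre-training on medical images and reports is what would make such embeddings meaningful for X-rays; the matching step itself stays this simple.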

The future of NLP

As you can see, NLP has a great future! The brightest minds in the world of artificial intelligence and natural language processing always have great ideas. Now we just have to wait for the winners to be announced! Who are you betting on?