August 11, 2021

What is a transformer with regard to NLP?

By Sofía Sánchez González

Have you ever wondered what a transformer is with regard to NLP? Although the Michael Bay movie saga comes to mind, the power of transformers in natural language processing is far greater than that of robot cars.

In this post we’ll explain what a transformer is and what uses it has in real life and in the generation of automatic content. Let’s start with some history.

CBOW and Skip-gram, old news in the NLP world

For many years, the most popular model architectures for working with text were CBOW (Continuous Bag of Words) and Skip-gram. What does each one consist of?

- CBOW: Within a sentence, the distributed representations of the context (the surrounding words) are combined to predict the word in the middle. For example, with a window of three, the three words before a position and the three words after it are used to predict the word at that position.

It is a X day.

Once trained, the CBOW model will be able to guess the hidden word.

It is a nice day.

- Skip-gram: It does just the opposite. We have the word in the center and we want to predict the words to its right and to its left. It is often reported to represent rare words better than CBOW, although it is slower to train.
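To make the difference concrete, here is a minimal sketch of how the training examples for each model could be built from the sentence above. The function names and the `window` parameter are illustrative assumptions, not part of any particular library, and a real implementation would go on to train embeddings from these pairs.

```python
def cbow_pairs(tokens, window=1):
    """Build (context_words, target_word) examples: context in, middle word out."""
    pairs = []
    for i, target in enumerate(tokens):
        left = tokens[max(0, i - window):i]
        right = tokens[i + 1:i + 1 + window]
        pairs.append((left + right, target))
    return pairs


def skipgram_pairs(tokens, window=1):
    """Build (center_word, context_word) examples: middle word in, neighbors out."""
    pairs = []
    for context, target in cbow_pairs(tokens, window):
        pairs.extend((target, neighbor) for neighbor in context)
    return pairs


tokens = "it is a nice day".split()
cbow = cbow_pairs(tokens, window=2)
skipgram = skipgram_pairs(tokens, window=2)

print(cbow[3])  # (['is', 'a', 'day'], 'nice')
print([pair for pair in skipgram if pair[0] == "nice"])
```

CBOW gets one example per position (many words in, one word out), while Skip-gram gets one example per neighbor (one word in, one word out).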

OK, but what happens when we have a wider context? These models only look at a small window of surrounding words and do not take their order into account. For example, take the phrase ‘The game started 0-2 against the local team, but then they came back and ended up winning 6-2‘. How do you classify it? Positive or negative? You have to take into account that the sentence changes direction midway and that there is a new context.

Solution? Neural networks. But memoryless neural networks have a limitation: they give you an accurate representation for a single word, whereas in practice text is a sequence of words. For tasks such as text classification (for example, sentiment analysis), which are very frequent in NLP, they are not the best option because they do not take into account the order of the words in the sentence or sequence.
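A quick way to see why ignoring word order is a problem: a minimal sketch, assuming a plain bag-of-words representation (word counts only), in which two sentences with opposite meanings become indistinguishable. The helper name `bag_of_words` is made up for this example.

```python
from collections import Counter


def bag_of_words(sentence):
    """Count the words in a sentence, discarding their order."""
    return Counter(sentence.lower().split())


a = bag_of_words("the dog bit the man")
b = bag_of_words("the man bit the dog")

same_bag = a == b
print(same_bag)  # True: the model cannot tell who bit whom
```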

What’s needed then? Let’s take the phrase:

It’s very cold in Moscow today.

We need a mechanism that remembers, when we are processing the word ‘Moscow’, that the words ‘It’s’, ‘very’, ‘cold’ and ‘in’ were also said. We need memory.

Recurrent neural networks change everything

Recurrent neural networks (RNNs) process sequences, be they daily stock prices, phrases or sentences, or sensor measurements. They read one element at a time and keep a summary of the elements seen so far in a memory called the state. This means they do take the order of the words into account.
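The idea of a state can be sketched in a few lines. This is a toy example with a single number as the state and hand-picked weights (`w_state` and `w_input` are assumptions for illustration; a real RNN learns weight matrices during training).

```python
import math


def rnn_step(state, x, w_state=0.5, w_input=1.0):
    """Combine the previous state with the current input into a new state."""
    return math.tanh(w_state * state + w_input * x)


def run_rnn(inputs):
    """Read the sequence one element at a time, carrying the state forward."""
    state = 0.0
    states = []
    for x in inputs:
        state = rnn_step(state, x)
        states.append(state)
    return states


# Same elements, different order: the final states differ.
forward = run_rnn([1.0, 0.0, -1.0])
backward = run_rnn([-1.0, 0.0, 1.0])
print(forward[-1], backward[-1])
```

Because each state depends on the one before it, the elements have to be processed strictly in sequence.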

But they are not perfect yet… They struggle when sequences are too long, and they are hard to train.

What is a transformer?

And we come to the question at hand! What is a transformer with regard to NLP?

A transformer is a deep learning model that adopts the attention mechanism, weighting the importance of each part of the input data differently. It is used primarily in the fields of natural language processing and computer vision.

Basically, a transformer is the best of the best.

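- It can be trained on much longer sequences than an RNN, and it’s as fast as a bullet (in this it does look like the cars from the Transformers movies);

- Training time is reduced, because the elements of a sequence can be processed in parallel rather than one at a time;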

- It’s able to identify relationships between the elements of the sequence no matter how distant they are.

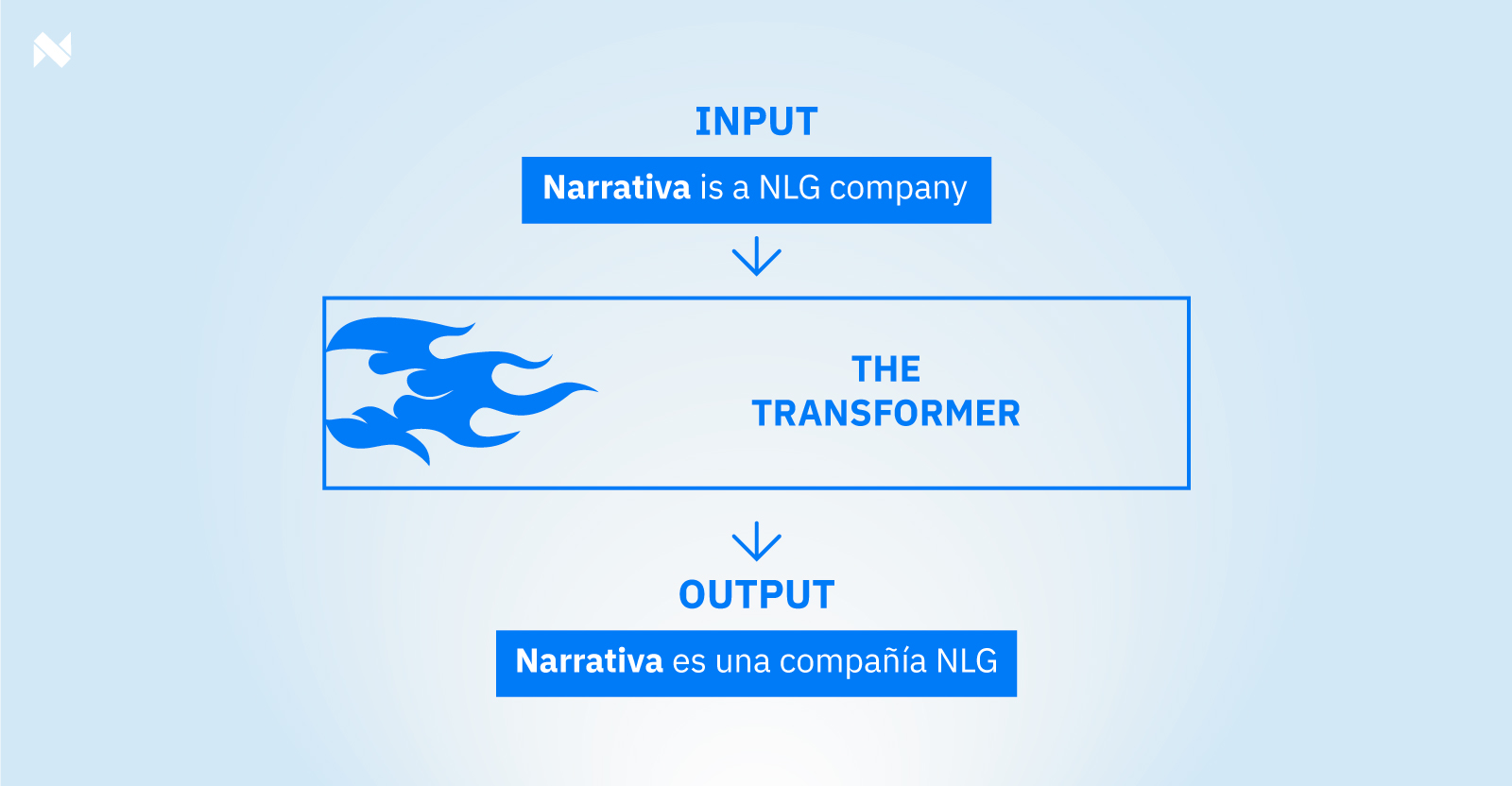

Transformers are used for specific problems: for example, to summarize or to translate.

Transformer used for translation

Let’s say it all has to do with attention. The attention mechanism provides context for any position in the input sequence. For example, if the input data is a natural language sentence, the transformer doesn’t need to process the beginning of the sentence before the end; it identifies the context that gives meaning to each word in the sentence.
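As a rough illustration of the attention mechanism, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is simplified on purpose: the queries, keys and values are the input vectors themselves (a real transformer applies learned projections and uses several attention heads), and the two-dimensional embeddings are made-up numbers.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def self_attention(vectors):
    """Each position looks at every position and mixes them by similarity."""
    scale = math.sqrt(len(vectors[0]))
    outputs, all_weights = [], []
    for query in vectors:
        weights = softmax([dot(query, key) / scale for key in vectors])
        mixed = [
            sum(w * value[d] for w, value in zip(weights, vectors))
            for d in range(len(query))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical embeddings for a three-word sentence.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(embeddings)
print(weights[0])  # how much the first word attends to each word
```

Each row of `weights` is computed independently of the others, so no position has to wait for an earlier one to be processed, and the distance between two words plays no role in the score.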

What do we use transformers for at Narrativa?

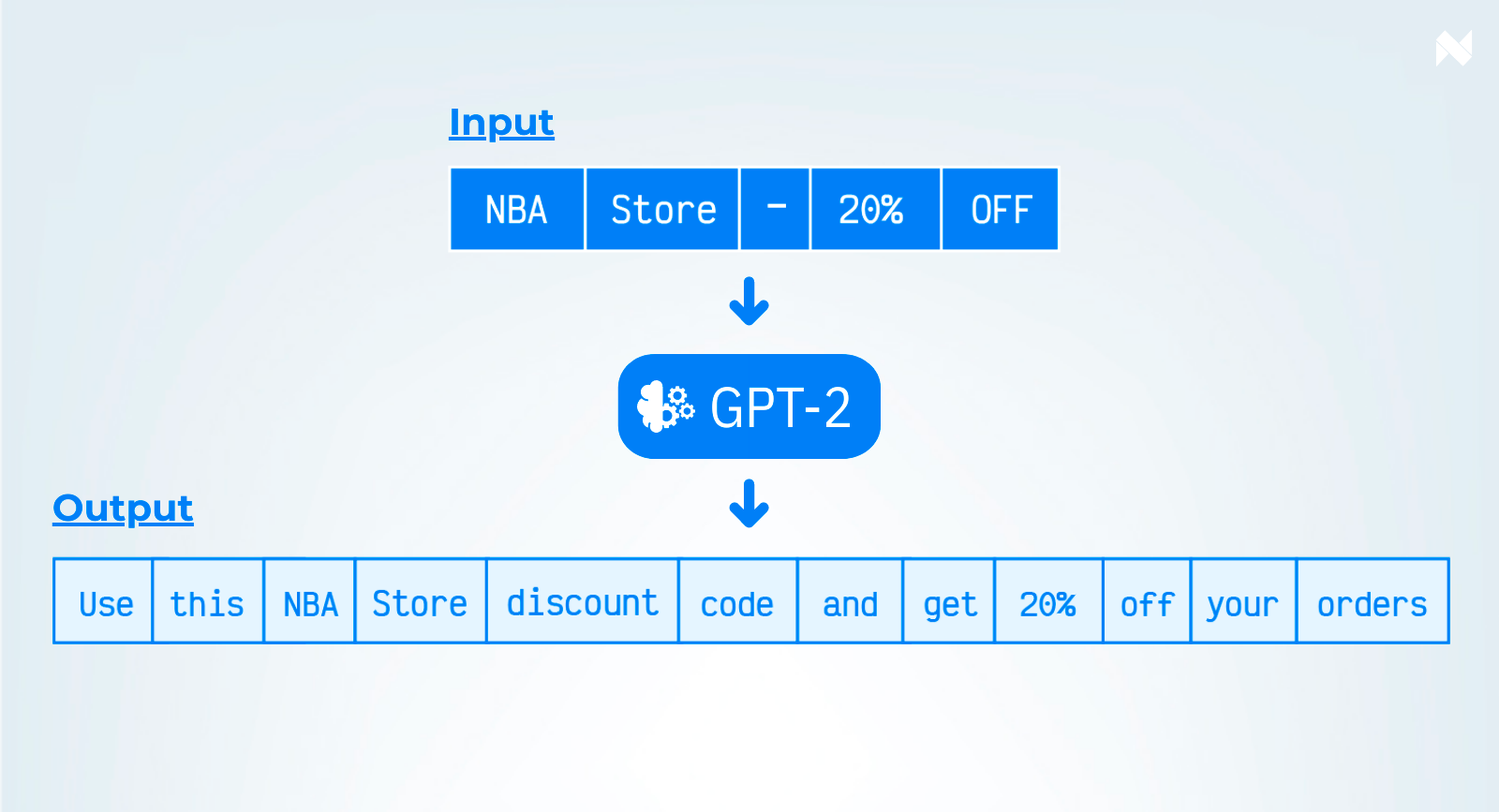

Narrativa’s IT team uses transformers on a daily basis. For example, we use them in the automatic generation of e-commerce content.

This is what we can do for the advertising industry!

You can take a look at the technology we use at this link.

Was that a lot to digest? …Or are you still hungry for more information? Either way, we’d be happy to discuss further! Get in touch today!
