November 4, 2022

FLAN-T5, a yummy model superior to GPT-3

By Sofía Sánchez González

Some artificial intelligence models go unnoticed despite their worth. This is the case with FLAN-T5, a model developed by Google with a name as appetizing as its NLP power. With it, the California company has delivered a new example of the democratization of artificial intelligence, and we explain why.

What is new about FLAN-T5?

First, there is Google T5 (Text-to-Text Transfer Transformer). T5 is a transformer-based architecture that takes a text-to-text approach, treating every task as text in and text out, and it is the epitome of encoder-decoder excellence in the world of natural language processing (NLP).

But you may be wondering: Google T5 came out a few years ago, did it not? What makes the FLAN-T5 model so yummy and unique?

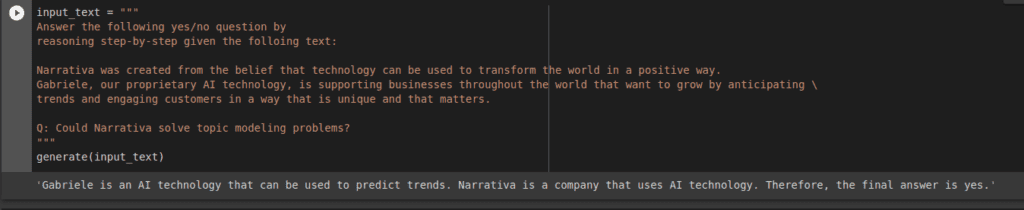

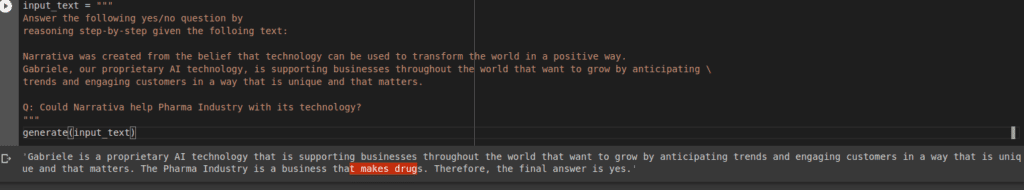

FLAN-T5 is T5 fine-tuned on a large collection of tasks phrased as instructions, and the main novelty is that the model reasons for itself. We can say “Tell me yes or no to this question and also explain your answer”, just like in school exams. I wish we had FLAN-T5 back then!

It is not a ‘mechanical’ model that simply learns by heart. It is capable of breaking down the text and reasoning about it thanks to the datasets used in training. Nor is it the typical Question-Answering model that simply hands you an answer.
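A minimal sketch of that kind of "answer and explain" prompt, assuming the Hugging Face `transformers` library (with PyTorch and `sentencepiece` installed) and the public `google/flan-t5-small` checkpoint. The helper names `build_reasoning_prompt` and `ask_flan_t5`, the instruction wording and the sample question are ours for illustration, not something prescribed by Google.

```python
def build_reasoning_prompt(question: str) -> str:
    """Ask for a yes/no verdict and an explanation in a single instruction."""
    return (
        "Answer the following yes/no question and explain your reasoning "
        "step by step.\n"
        f"Question: {question.strip()}"
    )


def ask_flan_t5(prompt: str, checkpoint: str = "google/flan-t5-small") -> str:
    """Generate an answer; the checkpoint is downloaded on first use."""
    # Imported lazily so the prompt helper works without the library installed.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForSeq2SeqLM.from_pretrained(checkpoint)
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=64)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


if __name__ == "__main__":
    prompt = build_reasoning_prompt("Can a penguin fly across the Atlantic?")
    print(prompt)
    # Uncomment to run the model (needs network access for the download):
    # print(ask_flan_t5(prompt))
```

The small checkpoint keeps the download light; larger checkpoints tend to give more convincing explanations, at the cost of memory.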

Why is it superior to GPT-3?

For several reasons:

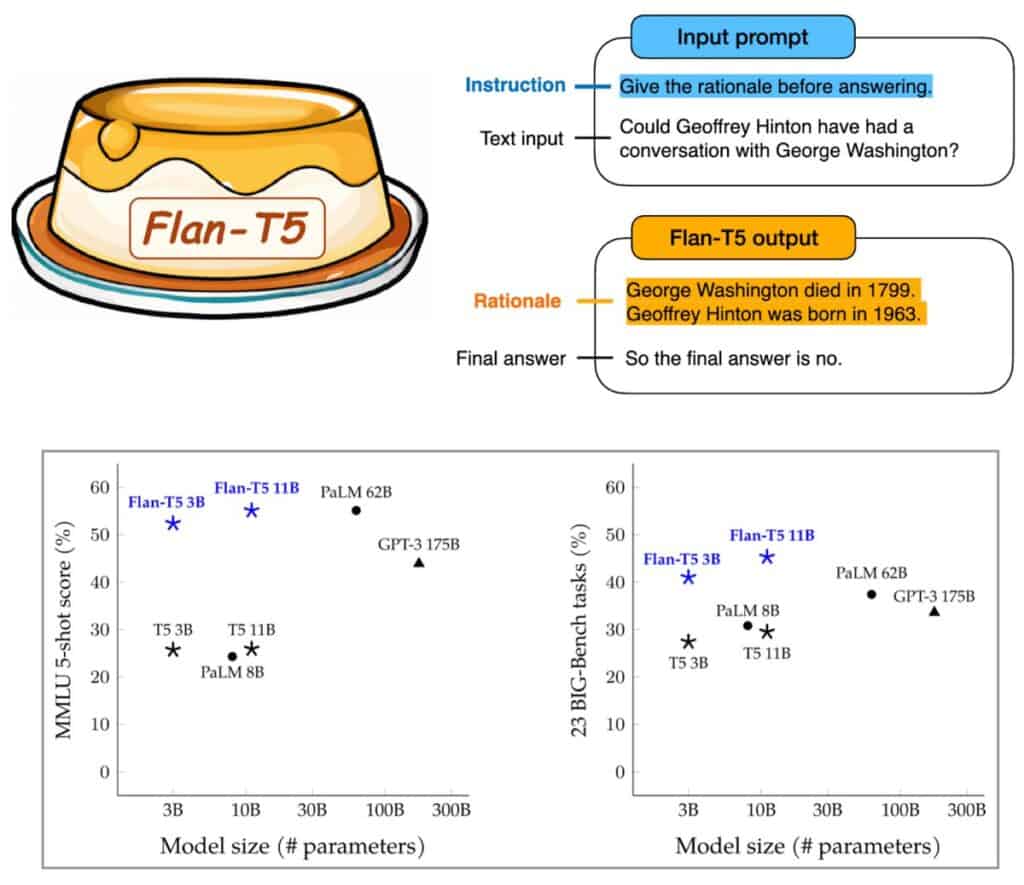

- GPT-3 is a very popular model, but testing it and using it properly requires a computing budget that is seldom found in a regular home; that kind of power is not easy to come by. FLAN-T5, by contrast, does not need large machines, because its smaller models/checkpoints were created with the common citizen in mind.

- It detects sarcasm and is very intuitive: it is able to reinterpret the questions it is asked.

- Tested with five examples in the prompt (5-shot), FLAN-T5 XL, the 3-billion-parameter model, outperforms GPT-3. In fact, it does not need many examples, and it is also very good in the zero-shot setting.
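To make the 5-shot versus zero-shot distinction concrete, here is a rough sketch of how such prompts can be assembled. The `Q:`/`A:` layout, the function name `build_few_shot_prompt` and the demonstration pairs are illustrative assumptions, not the format used in any published evaluation.

```python
from typing import Sequence, Tuple


def build_few_shot_prompt(
    examples: Sequence[Tuple[str, str]], question: str
) -> str:
    """Put solved examples first, then the open question for the model."""
    blocks = [f"Q: {q}\nA: {a}" for q, a in examples]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


# Hypothetical demonstrations, invented for this sketch.
EXAMPLES = [
    ("Is ice colder than steam?", "yes"),
    ("Is Madrid the capital of France?", "no"),
    ("Does a week have seven days?", "yes"),
    ("Is the Sun a planet?", "no"),
    ("Do spiders have eight legs?", "yes"),
]

question = "Is a whale a fish?"
five_shot = build_few_shot_prompt(EXAMPLES, question)  # 5 examples + question
zero_shot = build_few_shot_prompt([], question)        # question only

if __name__ == "__main__":
    print(five_shot)
    print("---")
    print(zero_shot)
```

Either string can then be passed to the model as ordinary input text; the only difference between the two settings is how many solved examples precede the question.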

Our NLP engineer Manuel Romero, who has already tested the model, sums it up for us like this: “It is one of the smallest models (3B params) with the most Natural Language Understanding that I have seen in my years of experience in the world of NLP”.

All the tasks you can imagine

Google has developed and released this model in 5 versions (see here):

- Flan-T5-Small

- Flan-T5-Base

- Flan-T5-Large

- Flan-T5-XL

- Flan-T5-XXL
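As a rough guide to choosing among the five checkpoints, the sketch below maps each one to its Hugging Face Hub identifier and picks the largest whose weights fit a given memory budget. The parameter counts are the approximate figures reported with the release; the function name `largest_checkpoint_that_fits` and the weights-only estimate (4 bytes per parameter, ignoring activations and other overhead) are simplifying assumptions of ours.

```python
# Size name -> (Hub id, approximate parameter count).
CHECKPOINTS = {
    "small": ("google/flan-t5-small", 80_000_000),
    "base": ("google/flan-t5-base", 250_000_000),
    "large": ("google/flan-t5-large", 780_000_000),
    "xl": ("google/flan-t5-xl", 3_000_000_000),
    "xxl": ("google/flan-t5-xxl", 11_000_000_000),
}


def largest_checkpoint_that_fits(memory_gb: float, bytes_per_param: int = 4) -> str:
    """Return the Hub id of the largest checkpoint whose weights fit in memory.

    Only the weights are counted; real usage needs extra headroom.
    """
    budget_bytes = memory_gb * 1024**3
    fitting = [
        (params, hub_id)
        for hub_id, params in CHECKPOINTS.values()
        if params * bytes_per_param <= budget_bytes
    ]
    if not fitting:
        raise ValueError("no checkpoint fits in the given memory budget")
    return max(fitting)[1]


if __name__ == "__main__":
    for gb in (1, 16, 64):
        print(gb, "GB ->", largest_checkpoint_that_fits(gb))
```

Passing `bytes_per_param=2` approximates half-precision weights, which roughly halves the memory each checkpoint needs.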

If you want concrete examples of what you can do with FLAN-T5, here they are:

- Translate between more than 60 languages. In fact, Spanish is well represented, as it is the second most used language in training.

- Summarize text.

- Answer general questions: “how many minutes should I cook my egg?”

- Answer historical questions (or even questions about the future).

- Solve math problems when it lays out its reasoning.
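Each of the tasks above comes down to phrasing the request as a plain-text instruction. The templates below are a small sketch of how that might look; the wording, the `TASK_TEMPLATES` table and the `make_prompt` helper are illustrative assumptions, since FLAN-T5 accepts free-form instructions rather than a fixed set of commands.

```python
# Hypothetical instruction templates, one per task from the list above.
TASK_TEMPLATES = {
    "translate": "Translate from {source} to {target}: {text}",
    "summarize": "Summarize: {text}",
    "question": "Answer the following question: {text}",
    "math": "Answer the following question by reasoning step by step: {text}",
}


def make_prompt(task: str, **fields: str) -> str:
    """Fill in the template for a task; raises ValueError for unknown tasks."""
    try:
        template = TASK_TEMPLATES[task]
    except KeyError:
        raise ValueError(f"unknown task: {task!r}") from None
    return template.format(**fields)


if __name__ == "__main__":
    print(make_prompt("translate", source="English", target="Spanish",
                      text="Good morning"))
    print(make_prompt("question",
                      text="How many minutes should I cook my egg?"))
    print(make_prompt("math",
                      text="A box holds 12 eggs. How many eggs are in 3 boxes?"))
```

The resulting strings are ordinary model inputs, so trying a different phrasing is just a matter of editing the template.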

Of course, it is not all advantages. FLAN-T5 does not calculate results very well when our format deviates from what it knows, and the smaller the checkpoint, the less general knowledge it retains. But this Google model offers so many good things that we should not lose sight of them.

Want to try it?

No problem. We tested it in Google Colab and found it very powerful: we did not have to fine-tune it.

We enclose the Colab in this link so you can try it and do your own research.

Also, here you have the demo of Hugging Face.

Tell us what you think!

About Narrativa

Narrativa is an internationally recognized content services company that uses its proprietary artificial intelligence and machine learning platforms to build and deploy digital content solutions for enterprises. Its technology suite consists of data extraction, data analysis, natural language processing (NLP) and natural language generation (NLG) tools that work together seamlessly to power a lineup of smart content creation, automated business intelligence reporting and process optimization products for a variety of industries.

Contact us to learn more about our solutions!
