August 25, 2020

GPT-2 technology for the generation of e-commerce content

By Narrativa Staff

A few weeks ago, we told you about GPT-2 technology, which has already been eclipsed by the launch of a newer version. However, this model is far from obsolete. Let us tell you about another case study to illustrate what digital transformation looks like first-hand.

Content for e-commerce can be a real headache for some companies, and writing descriptions by hand for thousands of product listings takes a great deal of time and money.

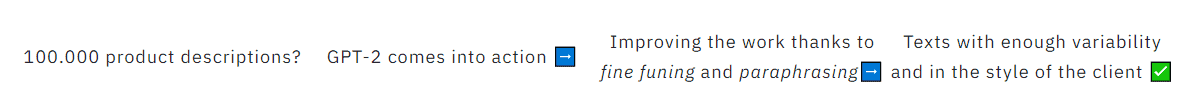

A customer in Europe came to us with this problem a few months ago. Their platform is dedicated to savings opportunities, rewards and information on purchases, and they had to write 100,000 descriptions to send to their customers by email. We suggested using GPT-2 to generate them automatically.

This is what we proposed:

Why use GPT-2 and not GPT-3?

Developers from all over the world have been blown away by GPT-3. The latest model launched by OpenAI has impressive capabilities and has been trained on 300 billion words. So, why even bother with GPT-2? Because it has one key advantage: it is easier to ‘retrain’ so that it focuses on a more specific domain. Both versions are trained on a wide variety of text to learn the intrinsic patterns of a language, but GPT-2 is much better suited to this kind of retraining.

GPT-3 is huge: it already knows everything. The 100,000 examples that our client brought to us pale in comparison to the 300 billion words that it has already been trained on.

As in real life, the training of a neural network is based on mistakes and failures. The system is penalized every time it fails, using a mathematical formula to correct the error. GPT models are based on predicting the next word in a sentence. The algorithm determines how close the word is to the expected result and adjusts the parameters accordingly.
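To make the idea of a penalty concrete, here is a minimal sketch of a next-word loss. It assumes a toy three-word vocabulary and made-up scores, and it uses cross-entropy, the standard penalty for this kind of prediction task. The names `softmax`, `next_word_loss`, `vocab` and `logits` are illustrative only and are not taken from our production code.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - highest) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def next_word_loss(logits, target_index):
    """Cross-entropy penalty: small when the expected word gets a high probability."""
    probs = softmax(logits)
    return -math.log(probs[target_index])


# Toy vocabulary and scores (assumed values, for illustration only).
vocab = ["off", "code", "voucher"]
logits = [2.0, 0.5, 0.1]  # the model favours "off" as the next word

loss_good = next_word_loss(logits, vocab.index("off"))      # expected word was "off"
loss_bad = next_word_loss(logits, vocab.index("voucher"))   # expected word was "voucher"

print(f"penalty when 'off' was expected:     {loss_good:.3f}")
print(f"penalty when 'voucher' was expected: {loss_bad:.3f}")
```

The penalty is lower when the word the model favoured is the one that actually came next. During training, the parameters are nudged in whichever direction reduces this number.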

The great thing about GPT-2 is that it can learn domain-specific patterns. So, if we train it on the language of the Bible, it will learn to write in a biblical style. If we train it on rap lyrics, it will learn to write like a rapper.

How does GPT-2 know what our customer wants?

In our case study, the client wanted the first word in all of the 100,000 descriptions to be in capitals. All of the client’s text had to be rewritten to follow this style request, so we simply ‘forced’ the model to write these words in capital letters.

When we fine-tune the training in this way, GPT-2 starts to learn what is required. With small adjustments like this one, we can adapt the text to the client’s interests.
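One simple way to enforce a style rule like this is to apply it to every training example before fine-tuning, so the model only ever sees text that follows the rule. The sketch below is an assumption about how that could be done, not a record of the exact pipeline. It reads "in capitals" as fully upper-case, and the helper name `capitalize_first_word` is hypothetical.

```python
def capitalize_first_word(description: str) -> str:
    """Upper-case the first word of a description and leave the rest untouched."""
    stripped = description.strip()
    if not stripped:
        return stripped
    first, _, rest = stripped.partition(" ")
    return first.upper() + (" " + rest if rest else "")


# Sample descriptions invented for illustration.
samples = [
    "score 10% off Muscle Mousse orders",
    "  get free delivery on your first order",
]
styled = [capitalize_first_word(s) for s in samples]

for line in styled:
    print(line)
```

If the rule is only an initial capital letter, replacing `first.upper()` with `first.capitalize()` would be the equivalent change.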

This fine-tuning did not just involve adding capital letters; the descriptions also had to be similar to one another without being repetitive. Here, paraphrasing comes into play. This is another common NLP technique that consists of rewriting text based on a request. Although there are thousands of categories in the descriptions, these two techniques will ensure that:

- The text is in the style that the client requested

- The descriptions have enough variability between them and are not all the same

- Tedious and repetitive work is no longer done by the workforce
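The variability requirement can be spot-checked on a sample of generated descriptions. The following is a minimal sketch that flags pairs of texts that are nearly identical, using `difflib.SequenceMatcher` from the Python standard library. The 0.9 threshold and the function names are assumptions chosen for illustration, and the pairwise comparison is only practical for small samples, not for all 100,000 descriptions at once.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Return a ratio between 0 and 1; 1.0 means the texts are identical."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def too_similar_pairs(texts, threshold=0.9):
    """List index pairs whose similarity is at or above the threshold."""
    pairs = []
    for i in range(len(texts)):
        for j in range(i + 1, len(texts)):
            if similarity(texts[i], texts[j]) >= threshold:
                pairs.append((i, j))
    return pairs


texts = [
    "Score 10% off Muscle Mousse orders by using this Monster Supplement voucher code",
    "Monster Supplements Promo Code: 10% Off Muscle Mousse Products",
    "Score 10% off Muscle Mousse orders by using this Monster Supplement voucher code",
]

# The first and third texts are exact duplicates, so only that pair is flagged.
print(too_similar_pairs(texts))
```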

For this task, we used Azure and its NC-series machines, which we have access to because we belong to its startup program.

Here is an example:

FROM DATA:

Monster Supplements |||| 10% |||| OFF |||| 10% OFF Muscle Mousse |||| Get 10% OFF Muscle Mousse at Monster Supplements;We reserve the right to pause, cancel or amend this promotion at any time, without prior notification.;United Kingdom

TO TEXT:

Score 10% off Muscle Mousse orders by using this Monster Supplement voucher code

Monster Supplements Promo Code: 10% Off Muscle Mousse Products
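The record above can be split into fields and paired with a target sentence to form one training example. The sketch below assumes that `||||` separates the main fields and `;` separates the details in the last field. The field names (`merchant`, `amount`, `kind`, `title`, `details`) are guesses made for illustration, and the `FROM DATA` / `TO TEXT` layout simply reuses the labels from this post. It is not necessarily the format used for fine-tuning.

```python
FIELD_SEPARATOR = "||||"

RAW = (
    "Monster Supplements |||| 10% |||| OFF |||| 10% OFF Muscle Mousse |||| "
    "Get 10% OFF Muscle Mousse at Monster Supplements;"
    "We reserve the right to pause, cancel or amend this promotion at any time, "
    "without prior notification.;United Kingdom"
)


def parse_record(raw: str) -> dict:
    """Split one raw record into named fields (field names are hypothetical)."""
    parts = [p.strip() for p in raw.split(FIELD_SEPARATOR)]
    if len(parts) != 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}")
    merchant, amount, kind, title, details = parts
    return {
        "merchant": merchant,
        "amount": amount,
        "kind": kind,
        "title": title,
        "details": [d.strip() for d in details.split(";")],
    }


def build_training_example(raw: str, target: str) -> str:
    """Join the input record and the desired description into one training text."""
    return f"FROM DATA: {raw.strip()}\nTO TEXT: {target.strip()}"


record = parse_record(RAW)
example = build_training_example(
    RAW,
    "Score 10% off Muscle Mousse orders by using this Monster Supplement voucher code",
)

print(record["merchant"], "|", record["amount"], "|", record["details"][-1])
print(example)
```

Keeping the input and the target in a fixed layout like this lets the model learn where the data ends and where the description should begin.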

At Narrativa, we adapt our technology to the needs of each client, as content for e-commerce varies from one sector to another. However, the benefits of process and task automation are applicable to any client and any sector.
