August 30, 2021

Speed up the reading of your reports with Narrativa’s Question Answering model

By Sofía Sánchez González

Have you ever found yourself reading a report over and over again without any luck in finding the information you were looking for? Surely this has happened to you… and not only with regard to work, but maybe while reading the newspaper or an academic paper. Enter Narrativa’s perfect solution to speed up the reading of text.

More than 100 languages

At Narrativa we continue to innovate with the creation of new NLP models through our Hugging Face platform. A few months ago, we announced the creation of the first large-scale model for Question Answering (or QA) covering social media: a model that, unlike others, understands the language used on the networks themselves.

Now we’re taking it a step further! With an even more powerful dataset, we have fine-tuned the mT5 (multilingual T5) model for QA tasks.

How does the model work?

Let’s imagine a report on your company’s financial results over the last 15 years. It runs to more than 50 pages, with all kinds of details about the movements and steps taken by the company, such as mergers and sales. You want to know in what year a certain merger occurred.

How do you get the info you need fast? Our QA model! We pass the report to our model and ask the question “In what year did the merger with X company take place?” Without having to read all 50 pages, we get the answer from the model created by Narrativa quicker than you can snap your fingers. To do this, the report is divided into several paragraphs, which are passed to the model to obtain the answer.
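To make the paragraph-splitting idea concrete, here is a minimal sketch in Python. It is not Narrativa’s actual pipeline: the helper names (`split_into_paragraphs`, `answer_from_report`) are hypothetical, and `toy_answer_fn` is only a stand-in for the model so the example runs on its own. In practice you would pass a function that calls the real QA model instead.

```python
import re
from typing import Callable, List, Tuple


def split_into_paragraphs(report: str) -> List[str]:
    """Split a report on blank lines and drop empty chunks."""
    chunks = re.split(r"\n\s*\n", report)
    return [chunk.strip() for chunk in chunks if chunk.strip()]


def answer_from_report(
    report: str,
    question: str,
    answer_fn: Callable[[str, str], str],
) -> List[Tuple[int, str]]:
    """Ask the question against each paragraph and keep non-empty answers.

    answer_fn(question, context) is an assumed interface standing in
    for a call to the QA model.
    """
    results = []
    for index, paragraph in enumerate(split_into_paragraphs(report)):
        answer = answer_fn(question, paragraph).strip()
        if answer:
            results.append((index, answer))
    return results


def toy_answer_fn(question: str, context: str) -> str:
    """Toy stand-in for the model (assumption, not the real thing):
    returns the first year found in a paragraph that mentions a merger."""
    if "merger" not in context.lower():
        return ""
    match = re.search(r"\b(19|20)\d{2}\b", context)
    return match.group(0) if match else ""
```

Calling `answer_from_report(report, "In what year did the merger with X company take place?", toy_answer_fn)` returns a list of `(paragraph_index, answer)` pairs, so you can see which paragraph each candidate answer came from.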

Here’s another example for all those who keep up with matters related to artificial intelligence. If you’re looking for information about transformers, for example, but don’t have the time to read an article in full, you can use the abstract of a transformers article as the input and ask what new features this architecture introduces. This could be very useful for thesis students!

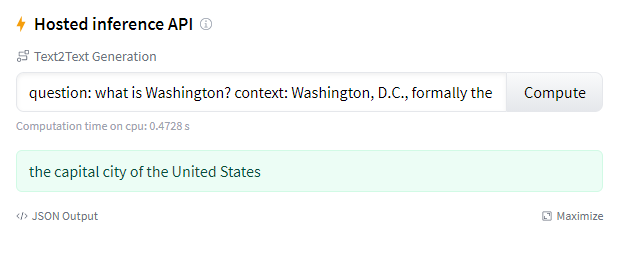

You can try it on our Hugging Face profile.

Try our model!

What if we want to generate a question and not an answer?

No problem; you can test that here.

To sum up so far:

- The QA model is trained on the question plus the context, and learns to predict the answer.

- The QG (question generation) model is trained on the answer plus the context, and learns to generate the question.
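As a rough illustration of the two input formats, the sketch below builds the text that each model would receive. The `question:` / `answer:` / `context:` prefixes follow a common convention for T5-style models, but they are an assumption here, and the function names are hypothetical. Check the model card for the exact format each model expects.

```python
def build_qa_input(question: str, context: str) -> str:
    """QA input: question plus context; the model's target is the answer.

    The 'question:' and 'context:' prefixes are an assumed convention.
    """
    return f"question: {question.strip()} context: {context.strip()}"


def build_qg_input(answer: str, context: str) -> str:
    """QG input: answer plus context; the model's target is the question.

    The 'answer:' and 'context:' prefixes are an assumed convention.
    """
    return f"answer: {answer.strip()} context: {context.strip()}"
```

The only difference between the two tasks is which piece is given and which is generated: QA goes from question and context to answer, while QG goes from answer and context to question.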

What kind of technology do we use?

Our model is based on Google’s T5, specifically its multilingual version, mT5. Although it must first be fine-tuned, it covers languages as varied as Afrikaans, Azerbaijani, Chichewa, Corsican and even Esperanto.

Can clickbait be avoided in the future?

How many times have we come across what we thought was news, only to realize later that it was clickbait? With the further development of our model, we could get the answer we are looking for without even having to click the link. But don’t worry, we’ll keep you updated as this progresses!

At Narrativa we have this and many more solutions to streamline the day-to-day operations of your company. If you’re not sure how we can help make your processes more efficient, or simply want to learn more, contact us at [email protected].
